In quantitative finance, the ability to collect, validate, and prepare data efficiently is essential to staying competitive. At Sabr Research, we've built an infrastructure for financial data collection and processing that orchestrates ingestion from diverse sources, applies rigorous data quality checks, and prepares data for downstream machine learning models.

Scalability & Robustness from Day 1

When it comes to building data infrastructure, starting strong is non-negotiable. Scalability and robustness aren't features you add on later; they're principles that should guide design from the very beginning. By prioritizing scalable architecture from Day 1, you lay the groundwork for straightforward expansion. Whether it's integrating new data sources, introducing additional validation checks, or layering in new analytical modules, a strong foundation lets each new piece fit into place. This is especially critical in fast-changing environments.

Too often, short-term optimizations lead to long-term technical debt. Quick fixes may feel efficient, but they come at the expense of flexibility down the road. We've chosen to build with the long view in mind, investing in clean architecture and thoughtful design now so we're not stuck paying down a costly mess later. We use the AWS CDK to define our internal infrastructure as code, not only to foster better collaboration within our team but also to keep our systems organized and easy to grow. Creating a stack is simple, as shown here:

    import * as cdk from 'aws-cdk-lib';
    import { Construct } from 'constructs';
    import * as lambda from 'aws-cdk-lib/aws-lambda';

    export class DataPipelineStack extends cdk.Stack {
      // In CDK v2, Construct comes from the separate 'constructs' package.
      constructor(scope: Construct, id: string, props?: cdk.StackProps) {
        super(scope, id, props);
        // ...
      }
    }

Resources can be created through helper functions, which keeps the stack modular and easy to update and maintain. For example, many standard market datasets, such as EOD prices and the latest market news, can be collected independently and in parallel using simple Lambda functions. These functions can be defined through a helper like:

const makeLambda = (

logicalId: string,

name: string,

cmd: string[],

timeout: Duration,

memorySize: number,

envVars: { [key: string]: string }

) => {

return new lambda.DockerImageFunction(this, logicalId, {

functionName: name,

code: lambda.DockerImageCode.fromImageAsset('../', {

cmd: cmd,

}),

timeout: timeout,

memorySize: memorySize,

environment: {

BUCKET_NAME: ...envVars,

},

});

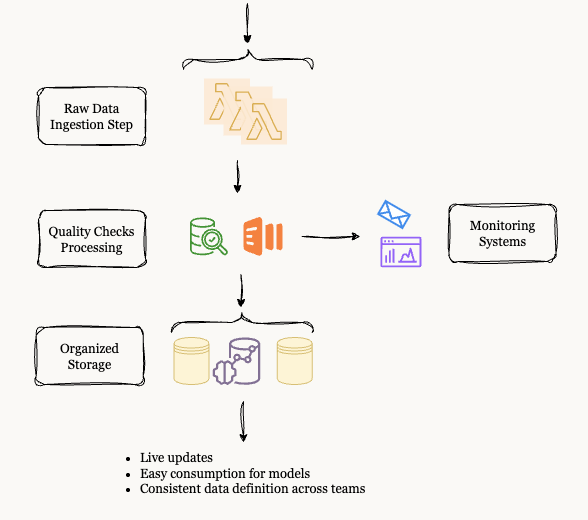

};Our data pipeline is a complex system in which multiple stages and logical steps are chained to form the overall flow.

Figure 1: Data Pipeline Flow Diagram

In practice this can be done through Step Functions, like so:

    // Imports needed at the top of the file:
    import { Duration } from 'aws-cdk-lib';
    import * as stepfunctions from 'aws-cdk-lib/aws-stepfunctions';
    import * as tasks from 'aws-cdk-lib/aws-stepfunctions-tasks';

    // Run the independent ingestion tasks in parallel.
    const parallelIngestion = new stepfunctions.Parallel(this, 'ParallelIngestion')
      .branch(dataIngestionTask1)
      .branch(dataIngestionTask2);

    // Combine the branch outputs. `aggregationLambda` is assumed to be defined
    // elsewhere in the stack, for example with makeLambda.
    const aggregationStep = new stepfunctions.Pass(this, 'AggregateResults')
      .next(new tasks.LambdaInvoke(this, 'DataAggregationTask', {
        lambdaFunction: aggregationLambda,
      }));

    // Check quality, then notify success or failure depending on the result.
    const dataProcessingFlow = aggregationStep
      .next(qualityCheckTask)
      .next(new stepfunctions.Choice(this, 'CheckQuality')
        .when(stepfunctions.Condition.stringEquals('$.Payload.quality', 'good'), notifySuccessTask)
        .otherwise(notifyFailureTask));

    const definition = parallelIngestion
      .next(dataProcessingFlow);

    // `timeout` is a number of minutes, assumed to be defined elsewhere.
    const stateMachine = new stepfunctions.StateMachine(this, 'DataPipelineStateMachine', {
      definition,
      timeout: Duration.minutes(timeout),
    });

Defining the pipeline as code in this way allows for rapid deployment, version control, and consistent environments, which reduces the risk of errors and improves overall system reliability. Data cleaning, which covers data organization, quality, metric definitions, and feature engineering, is equally important to the reliability of the data used in analysis. This is why we maintain a single common processing layer that harmonizes these steps and enforces alignment across use cases.
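The `CheckQuality` state above branches on `$.Payload.quality`; with `LambdaInvoke`, the function's return value is placed under `Payload` in the task output by default. As a minimal sketch of the logic a quality-check function could return, assuming a hypothetical input of summary statistics (`rowCount`, `nullCount`) and an illustrative 1% null threshold:

```typescript
// Hypothetical input: summary statistics produced by the aggregation step.
interface QualityEvent {
  rowCount: number;
  nullCount: number;
}

// Shape the Choice state reads via '$.Payload.quality'.
interface QualityResult {
  quality: 'good' | 'bad';
  reasons: string[];
}

// Assumed threshold, for illustration only.
const MAX_NULL_RATIO = 0.01;

// Pure decision logic; a Lambda handler would return this object as its result.
const assessQuality = (event: QualityEvent): QualityResult => {
  const reasons: string[] = [];
  if (event.rowCount === 0) {
    reasons.push('no rows ingested');
  } else if (event.nullCount / event.rowCount > MAX_NULL_RATIO) {
    reasons.push('null ratio above threshold');
  }
  return { quality: reasons.length === 0 ? 'good' : 'bad', reasons };
};
```

Keeping the decision in a pure function makes it easy to unit test separately from the Lambda and Step Functions wiring.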

High-Quality Standards

At Sabr Research, maintaining high-quality data is essential, and we've put several practices in place to ensure its integrity throughout our pipeline. The framework described above allows us to meet that standard. Here are some of the key aspects we have implemented:

Strict Standardized Tests

We apply strict, standardized tests to every feature we collect, so that only accurate and consistent data moves through the system.
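One way to keep such tests standardized is to declare them per feature in a single registry and run them uniformly. The sketch below illustrates the idea; the feature names (`close`, `volume`) and the individual checks are hypothetical examples, not a description of our actual test suite.

```typescript
// A check takes one value and returns an error message, or null if it passes.
type Check = (value: number) => string | null;

const finite: Check = (v) => (isFinite(v) ? null : 'not a finite number');
const positive: Check = (v) => (v > 0 ? null : 'must be > 0');
const nonNegative: Check = (v) => (v >= 0 ? null : 'must be >= 0');

// Hypothetical registry: each feature declares the checks it must pass.
const featureChecks: Record<string, Check[]> = {
  close: [finite, positive],
  volume: [finite, nonNegative],
};

// Run every registered check against a row and collect all failures.
const validateRow = (row: Record<string, number>): string[] => {
  const failures: string[] = [];
  for (const feature of Object.keys(featureChecks)) {
    const value = row[feature];
    if (value === undefined) {
      failures.push(`${feature}: missing`);
      continue;
    }
    for (const check of featureChecks[feature]) {
      const error = check(value);
      if (error !== null) failures.push(`${feature}: ${error}`);
    }
  }
  return failures;
};
```

A row passes only when `validateRow` returns an empty list, and adding a new feature means adding one registry entry rather than new validation code.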

Automated Monitoring

Automated notification and alarm systems quickly flag any anomaly, allowing us to take immediate action when something goes wrong.
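As a simple illustration of how an anomaly might be flagged automatically, the sketch below compares the latest value of a monitored metric (for example, a daily row count) against its history using a z-score. The z-score rule and the default threshold of 3 are assumptions made for the example; the post does not specify which detection method is used.

```typescript
// Mean of a non-empty sample.
const mean = (xs: number[]): number => xs.reduce((a, b) => a + b, 0) / xs.length;

// Population standard deviation of a non-empty sample.
const stdDev = (xs: number[]): number => {
  const m = mean(xs);
  return Math.sqrt(mean(xs.map((x) => (x - m) * (x - m))));
};

// Flag `latest` when it lies more than `threshold` standard deviations
// away from the historical mean.
const isAnomalous = (history: number[], latest: number, threshold = 3): boolean => {
  if (history.length < 2) return false; // not enough data to judge
  const sd = stdDev(history);
  if (sd === 0) return latest !== history[0]; // constant history: any change is unusual
  return Math.abs(latest - mean(history)) / sd > threshold;
};
```

In a deployed pipeline, a positive result would typically trigger a notification or alarm rather than just return a boolean.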

Safe Experimentation

We maintain multiple deployment stages to support safe experimentation and development, so we can test new features or improvements without risking the integrity of our production environment.
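A common way to keep stages isolated is to derive every resource name and setting from the stage itself, so that a development deployment cannot write to production resources. The sketch below shows the idea; the stage names, the `market-data-` naming scheme, and the settings are hypothetical.

```typescript
// Hypothetical set of deployment stages.
type Stage = 'dev' | 'staging' | 'prod';

interface StageConfig {
  bucketName: string;     // stage-specific storage location
  alarmsEnabled: boolean; // whether failures page someone
}

// Derive all stage-dependent settings in one place.
const configFor = (stage: Stage): StageConfig => ({
  bucketName: `market-data-${stage}`, // assumed naming scheme
  alarmsEnabled: stage !== 'dev',     // keep experiments quiet
});
```

The resulting values could then be passed into the stack, for instance as environment variables for the ingestion functions.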

Standardized Feature Stores

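Standardized feature and target stores hold key features and metrics computed with a unified logic. This is critical in finance for avoiding forward-looking (look-ahead) bias, where a model is trained on information that was not yet available at the time of the prediction.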
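One common way a feature store guards against this bias is a point-in-time lookup: each record carries the time at which it became available, and queries only see records available at or before the requested time. The sketch below is a minimal in-memory illustration; the record shape and the `getAsOf` name are assumptions for the example, not our store's actual interface.

```typescript
// One stored observation. `availableAt` is when the value became known,
// which may be later than the period it describes.
interface FeatureRecord {
  key: string;         // hypothetical identifier, e.g. a ticker
  availableAt: number; // epoch milliseconds
  value: number;
}

// Return the most recent value for `key` that was already available at `asOf`.
// Records published after `asOf` are ignored, which prevents look-ahead.
const getAsOf = (
  records: FeatureRecord[],
  key: string,
  asOf: number
): number | undefined => {
  let best: FeatureRecord | undefined;
  for (const r of records) {
    if (r.key !== key || r.availableAt > asOf) continue;
    if (best === undefined || r.availableAt > best.availableAt) best = r;
  }
  return best === undefined ? undefined : best.value;
};
```

Building training sets and serving live inference through the same lookup keeps both on identical, time-consistent logic.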

Ultimately, these measures allow us to collect and process data in a way that supports both accuracy and consistency, giving us the confidence to build reliable financial models while minimizing the risk of errors or bias.

Integrated & Intelligent

Our framework is designed to be both integrated and intelligent, streamlining the data processing and cleaning steps to support the development of machine learning models. By automating much of the data preparation work, it allows our science team to focus on building and refining models. The framework intelligently handles tasks like data validation, aggregation, and anomaly detection, so that clean, high-quality data is always ready for model training as well as critical inference phases.

With this setup, the complexities of data cleaning are hidden from the model development process. We no longer have to spend time manually addressing missing values or dealing with inconsistent data formats. Instead, the framework ensures that every dataset is properly cleaned and pre-processed, allowing our scientists to concentrate on creating more accurate and effective models. This efficiency not only speeds up model development but also helps us deliver more reliable results, ensuring that our models are based on the best possible data.
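To make the handling of missing values and inconsistent formats concrete, the sketch below normalizes a price series whose entries may arrive as numbers, numeric strings, blanks, or placeholders, and then forward-fills the gaps. Forward-filling is an assumption chosen for the example; the appropriate imputation rule depends on the dataset.

```typescript
// Raw values may arrive in several formats.
type RawPrice = number | string | null;

// Parse a raw value into a finite number, or null when missing or malformed.
const parsePrice = (raw: RawPrice): number | null => {
  if (raw === null) return null;
  if (typeof raw === 'string' && raw.trim() === '') return null;
  const n = typeof raw === 'number' ? raw : Number(raw);
  return isFinite(n) ? n : null;
};

// Forward-fill: replace each gap with the last valid value seen before it.
// Leading gaps stay null because there is nothing earlier to carry forward.
const forwardFill = (raw: RawPrice[]): (number | null)[] => {
  let last: number | null = null;
  return raw.map((r) => {
    const parsed = parsePrice(r);
    if (parsed !== null) last = parsed;
    return last;
  });
};
```

Because a forward fill uses only earlier observations, it does not leak future information into the cleaned series.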